Researcher Contact

Benjamin Morillon

Inserm Researcher

Unit 1106, Institute of Systems Neuroscience (INS), "Dynamics of Cognitive Processes" team

+33 (0)4 91 38 55 77


When it comes to recognizing a melody or understanding a spoken sentence, the human brain does not engage its two hemispheres equally. Although this asymmetry is well recognized by scientists, there had been no physiological or neural explanation for it until now. A team co-led by Inserm researcher Benjamin Morillon at the Institute of Systems Neuroscience (Inserm/Aix-Marseille Université), in collaboration with researchers at the Montreal Neurological Institute and Hospital of McGill University, has shown that, because they respond differently to the components of sound, neurons in the left auditory cortex participate in the recognition of speech, whereas neurons in the right auditory cortex participate in the recognition of music. These findings, to be published in the journal Science, suggest that the respective specializations of the brain hemispheres for music and speech enable the nervous system to optimize the processing of sound signals for communication purposes.

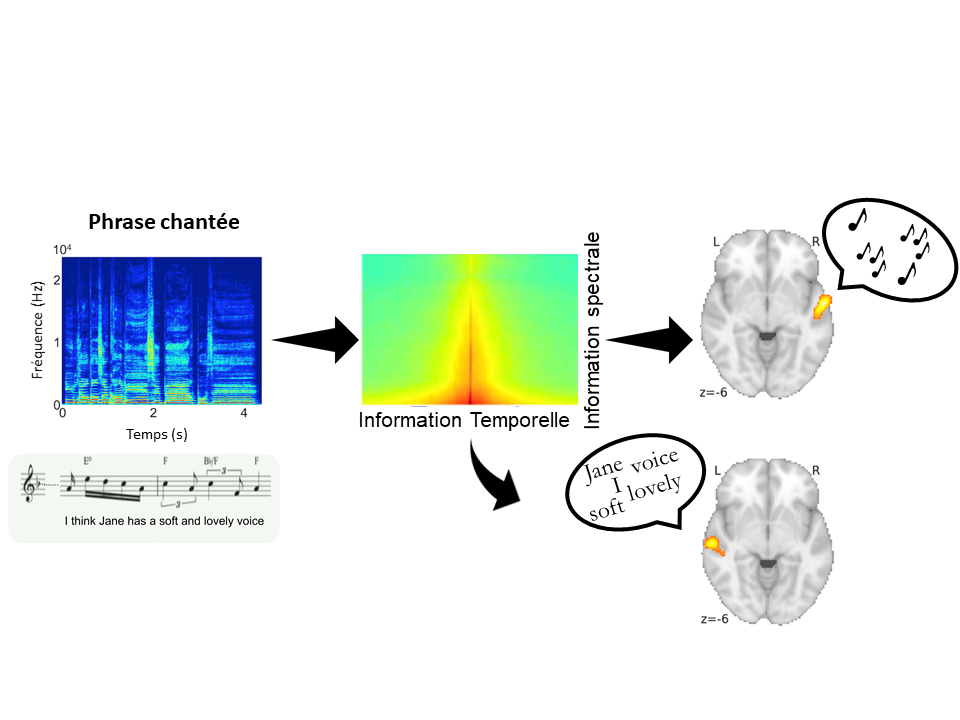

Sound is produced from a complex set of air vibrations, which, when reaching the cochlea of the inner ear, are distinguished according to their speed. At any given moment, slow vibrations are being translated into deep sounds and rapid vibrations into high-pitched ones. As a result, sound can be represented according to two dimensions: spectral (frequency) and temporal (time).
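The two dimensions described above can be illustrated with a toy spectrogram. The following is a minimal sketch and not the analysis used in the study: the function name `spectrogram`, the frame length, and the test signal are assumptions chosen for illustration. It splits a signal into consecutive frames (the temporal dimension) and measures how much energy each frame contains at each vibration rate (the spectral dimension).

```python
import cmath
import math

def spectrogram(signal, frame_len):
    """Magnitude of a naive DFT over consecutive non-overlapping frames.

    Returns a list of frames (time axis); each frame is a list of
    magnitudes for frequency bins 0 .. frame_len // 2 (frequency axis).
    """
    frames = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        mags = []
        for k in range(frame_len // 2 + 1):
            coeff = sum(
                x * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n, x in enumerate(frame)
            )
            mags.append(abs(coeff))
        frames.append(mags)
    return frames

# Hypothetical toy signal: a slow vibration (2 cycles per frame)
# followed by a rapid one (6 cycles per frame).
FRAME = 16
low = [math.sin(2 * math.pi * 2 * n / FRAME) for n in range(FRAME)]
high = [math.sin(2 * math.pi * 6 * n / FRAME) for n in range(FRAME)]
spec = spectrogram(low + high, FRAME)

# Index of the strongest frequency bin in each time frame.
peaks = [max(range(len(frame)), key=frame.__getitem__) for frame in spec]
print(peaks)
```

In this sketch, the first frame peaks in a low frequency bin and the second in a higher one, which is the sense in which a sound can be laid out along both time and frequency.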

These two auditory dimensions are fundamental because it is their simultaneous combination that stimulates the neurons of the auditory cortex. These neurons are thought to discriminate sounds that are relevant for individuals, such as those used for communication, which enable people to talk to and understand each other.

A team co-led by Inserm researcher Benjamin Morillon at the Institute of Systems Neuroscience (Inserm/Aix-Marseille Université) in collaboration with researchers at Montreal Neurological Institute and Hospital of McGill University used an innovative approach to understand how speech and music are decoded within each of the human brain hemispheres.

The researchers recorded 10 sentences, each sung by a soprano to 10 new melodies composed especially for the experiment. These 100 recordings, in which melody and speech are dissociated, were then distorted by decreasing the amount of information present in each dimension of the sound. Forty-nine participants were asked to listen to pairs of these distorted recordings and to determine whether they were identical in terms of speech content and melody. The experiment was conducted with French and English speakers to see whether the results were reproducible in different languages.
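The idea of reducing information in one dimension at a time can be sketched on a small time-frequency grid. This is only an illustration under stated assumptions: a simple moving average stands in for the researchers' actual distortion procedure, and the names `smooth`, `degrade_temporal`, and `degrade_spectral` and the toy grid are hypothetical.

```python
def smooth(values, width):
    """Centered moving average; the window shrinks at the edges."""
    half = width // 2
    out = []
    for i in range(len(values)):
        window = values[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out

def degrade_temporal(spec, width):
    """Blur each frequency channel across time (rows are time frames)."""
    channels = [smooth(list(ch), width) for ch in zip(*spec)]
    return [list(frame) for frame in zip(*channels)]

def degrade_spectral(spec, width):
    """Blur each time frame across frequency channels."""
    return [smooth(frame, width) for frame in spec]

# Toy grid: rows are time frames, columns are frequency channels.
# Energy alternates between channel 0 and channel 3 from frame to frame.
spec = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

blurred_time = degrade_temporal(spec, 3)
blurred_freq = degrade_spectral(spec, 3)
```

In this toy case, temporal blurring flattens the frame-to-frame alternation while the energy stays in the same two channels, whereas spectral blurring smears energy into neighboring channels while the alternation over time remains visible.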

A demonstration of the audio test proposed to the participants is available here:

https://www.zlab.mcgill.ca/spectro_temporal_modulations/

The research team found that for both languages, when the temporal information was distorted, participants had trouble distinguishing the speech content, but not the melody. Conversely, when the spectral information was distorted, they had trouble distinguishing the melody, but not the speech.

Functional magnetic resonance imaging (fMRI) of the participants’ neural activity showed that in the left auditory cortex the activity varied according to the sentence presented but remained relatively stable from one melody to another, whereas in the right auditory cortex the activity varied according to the melody presented but remained relatively stable from one sentence to another.

What is more, they found that degradation of the temporal information affected only the neural activity in the left auditory cortex, whereas degradation of the spectral information only affected neural activity in the right auditory cortex. Finally, the participants’ performance in the recognition task could be predicted simply by observing the neural activity of these two areas.

Original a cappella extract (bottom left) and its spectrogram (above, in blue) broken down according to the amount of spectral and temporal information (center). The right and left auditory cortices (right) decode melody and speech, respectively.

“These findings indicate that in each brain hemisphere, neural activity depends on the type of sound information,” explains Morillon. “While temporal information is secondary for recognizing a melody, it is essential for the correct recognition of speech. Conversely, while spectral information is secondary for recognizing speech, it is essential for recognizing a melody.”

The neurons in the left auditory cortex are therefore considered to be primarily receptive to speech thanks to their superior temporal information processing capacity, whereas those in the right auditory cortex are considered to be receptive to music thanks to their superior spectral information processing capacity. “Hemispheric specialization could be the nervous system’s way of optimizing the respective processing of the two communication sound signals that are speech and music,” concludes Morillon.


Distinct sensitivity to spectrotemporal modulation supports brain asymmetry for speech and melody

Philippe Albouy1,2,3*, Lucas Benjamin1, Benjamin Morillon4†, and Robert J. Zatorre1,2†

1 Cognitive Neuroscience Unit, Montreal Neurological Institute, McGill University, Montreal, Canada

2 International Laboratory for Brain, Music and Sound Research (BRAMS); Centre for Research in Brain, Language and Music; Centre for Interdisciplinary Research in Music, Media, and Technology, Montreal, Canada

3 CERVO Brain Research Centre, School of Psychology, Laval University, Quebec, Canada

4 Aix Marseille Univ, Inserm, INS, Inst Neurosci Syst, Marseille, France

*Corresponding author

† Equal contributions

Science: https://science.sciencemag.org/cgi/doi/10.1126/science.aaz3468