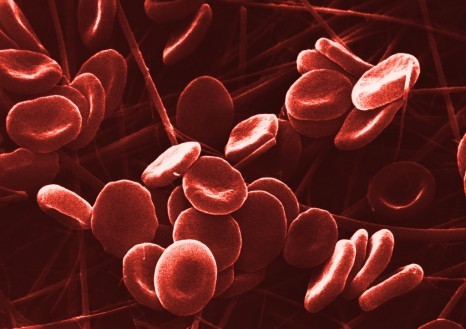

Emulsifiers are among the most commonly used additives. They are often added to processed and packaged foods such as certain industrial cakes, biscuits and desserts, as well as yoghurts, ice creams… © Kenta Kikuchi on Unsplash

Emulsifiers are among the additives most widely used by the food industry, helping to improve the texture of food products and extend their shelf life. Researchers from Inserm, INRAE, Université Sorbonne Paris Nord, Université Paris Cité and Cnam, working within the Nutritional Epidemiology Research Team (CRESS-EREN), studied possible links between dietary intake of emulsifier food additives and the onset of type 2 diabetes between 2009 and 2023. They analysed the dietary and health data of 104,139 adults taking part in the French NutriNet-Santé cohort study, assessing their consumption of these additives through dietary records completed every six months. The findings suggest an association between chronic consumption of certain emulsifiers and a higher risk of diabetes. The study is published in The Lancet Diabetes & Endocrinology.

In Europe and North America, 30 to 60% of adults' dietary energy intake comes from ultra-processed foods. A growing number of epidemiological studies suggest a link between higher consumption of ultra-processed foods and higher risks of diabetes and other metabolic disorders.

Emulsifiers are among the most commonly used additives. They are often added to processed and packaged foods such as certain industrial cakes, biscuits and desserts, as well as yoghurts, ice creams, chocolate bars, industrial breads, margarines and ready-to-eat or ready-to-heat meals, to improve their appearance, taste and texture and to extend their shelf life. Examples include mono- and diglycerides of fatty acids, carrageenans, modified starches, lecithins, phosphates, celluloses, gums and pectins.

As with all food additives, the safety of emulsifiers has previously been evaluated by food safety and health agencies on the basis of the scientific evidence available at the time. However, some recent studies suggest that emulsifiers may disrupt the gut microbiota and increase the risk of inflammation and metabolic disturbances, potentially leading to insulin resistance and the development of diabetes.

For more information: read Inserm’s report on type 2 diabetes

For the first time worldwide, a team of researchers in France has studied the relationships between dietary intake of emulsifiers, assessed over a follow-up period of up to 14 years, and the risk of developing type 2 diabetes in a large general-population study.

The results are based on data from 104,139 adults in France (average age 43 years; 79% women) who took part in the NutriNet-Santé web-cohort study (see box below) between 2009 and 2023.

Participants completed dietary records covering at least two days, collecting detailed information on all foods and drinks consumed, including commercial brands for industrial products. These records were repeated every six months over the 14-year period and matched against databases to identify the presence and amount of food additives (including emulsifiers) in the products consumed. Laboratory assays were also performed to provide quantitative data. Together, this allowed chronic exposure to these emulsifiers to be measured over time.

During follow-up, participants reported diagnoses of diabetes (1,056 cases), and these reports were validated using a multi-source strategy (including data on diabetes medication use). The analysis took into account several well-known risk factors for diabetes, including age, sex, weight (BMI), educational level, family history, smoking, alcohol consumption and physical activity, as well as the overall nutritional quality of the diet (including sugar intake).

After an average follow-up of seven years, the researchers observed that chronic exposure (assessed from repeated dietary records) to the following emulsifiers was associated with an increased risk of type 2 diabetes:

- carrageenans (total carrageenans and E407; 3% increased risk per increment of 100 mg per day)

- tripotassium phosphate (E340; 15% increased risk per increment of 500 mg per day)

- mono- and diacetyltartaric acid esters of mono- and diglycerides of fatty acids (E472e; 4% increased risk per increment of 100 mg per day)

- sodium citrate (E331; 4% increased risk per increment of 500 mg per day)

- guar gum (E412; 11% increased risk per increment of 500 mg per day)

- gum arabic (E414; 3% increased risk per increment of 1000 mg per day)

- xanthan gum (E415; 8% increased risk per increment of 500 mg per day)
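The percentages above are relative increases in risk per stated increment of daily intake, and the increment differs from one emulsifier to another (100, 500 or 1000 mg per day). The short Python sketch below shows one way to put them on a common scale. It assumes, without confirmation from this summary, that each association is log-linear in dose, as in a Cox proportional hazards model with a continuous exposure; the names `REPORTED` and `rescale_hazard_ratio` are hypothetical and used only for illustration.

```python
import math

# Per-increment increases in risk as listed above:
# (emulsifier, percent increase in risk, increment in mg per day).
REPORTED = [
    ("carrageenans (E407)", 3, 100),
    ("tripotassium phosphate (E340)", 15, 500),
    ("E472e", 4, 100),
    ("sodium citrate (E331)", 4, 500),
    ("guar gum (E412)", 11, 500),
    ("gum arabic (E414)", 3, 1000),
    ("xanthan gum (E415)", 8, 500),
]


def rescale_hazard_ratio(percent_increase: float, increment_mg: float, dose_mg: float) -> float:
    """Return the hazard ratio implied for an intake difference of `dose_mg` per day.

    ASSUMPTION (not stated in the source): the association is log-linear in dose,
    so log(HR) scales in proportion to the intake difference.
    """
    hr_per_increment = 1 + percent_increase / 100
    return math.exp(math.log(hr_per_increment) * dose_mg / increment_mg)


if __name__ == "__main__":
    # Express every association per 100 mg/day so they share one scale.
    for name, pct, inc in REPORTED:
        hr = rescale_hazard_ratio(pct, inc, 100)
        print(f"{name}: HR {hr:.3f} per 100 mg/day")
```

Under this assumption, increments multiply rather than add: 11% per 500 mg/day of guar gum would correspond to roughly 1.11² ≈ 1.23 for a difference of 1,000 mg/day. These figures are relative hazards, not absolute risks, and a per-100 mg comparison does not reflect how much of each additive people typically consume, which the different increments suggest varies between additives.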

This study is an initial exploration of these relationships, and further investigations are needed to establish causal links. The researchers noted several limitations, including the predominance of women in the sample, a higher level of education than in the general population and generally more health-promoting behaviours among NutriNet-Santé participants. Caution is therefore needed when extrapolating the conclusions to the entire French population.

The study is nevertheless based on a large sample, and the researchers accounted for a large number of factors that could have introduced confounding bias. They also used unique, detailed data on exposure to food additives, down to the commercial brand names of the industrial products consumed. In addition, the results remained consistent across various sensitivity analyses[1], which reinforces their reliability.

‘These findings come from a single observational study for the moment and cannot on their own establish a causal relationship. They need to be replicated in other epidemiological studies worldwide and supplemented with toxicological and interventional experimental studies to further inform the mechanisms linking these food additive emulsifiers to the onset of type 2 diabetes. However, our results represent key elements to enrich the debate on re-evaluating the regulations around the use of additives in the food industry, in order to better protect consumers,’ explain Mathilde Touvier, Research Director at Inserm, and Bernard Srour, Junior Professor at INRAE, lead authors of the study.

As next steps, the research team will examine variations in certain blood markers and in the gut microbiota linked to consumption of these additives, to better understand the underlying mechanisms. The researchers will also study the health impact of additive mixtures and their potential ‘cocktail effects’. In addition, they will work with toxicologists to test the impact of these exposures in in vitro and in vivo experiments, in order to gather further evidence regarding a causal link.

NutriNet-Santé is a public health study coordinated by the Nutritional Epidemiology Research Team (CRESS-EREN, Inserm/INRAE/Cnam/Université Sorbonne Paris Nord/Université Paris Cité). Thanks to the commitment and loyalty of over 170,000 participants (known as Nutrinautes), it advances research into the links between nutrition (diet, physical activity, nutritional status) and health. Launched in 2009, the study has already given rise to over 270 international scientific publications. In France, recruitment of new participants is still ongoing to continue advancing public research into the relationship between nutrition and health.

By devoting a few minutes per month to answering questionnaires on diet, physical activity and health through the secure online platform etude-nutrinet-sante.fr, participants help advance knowledge towards a healthier and more sustainable diet.

[1] Sensitivity analyses in epidemiology test the robustness of statistical models by varying certain parameters, hypotheses or variables to assess the stability of the observed associations. In this study, for example, the analyses additionally accounted for sweetener consumption, weight gain during follow-up and other metabolic diseases.

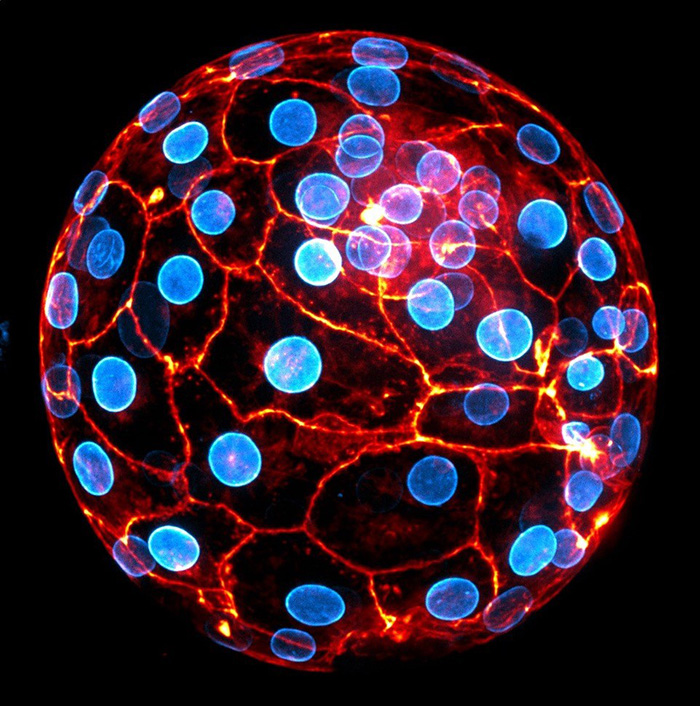

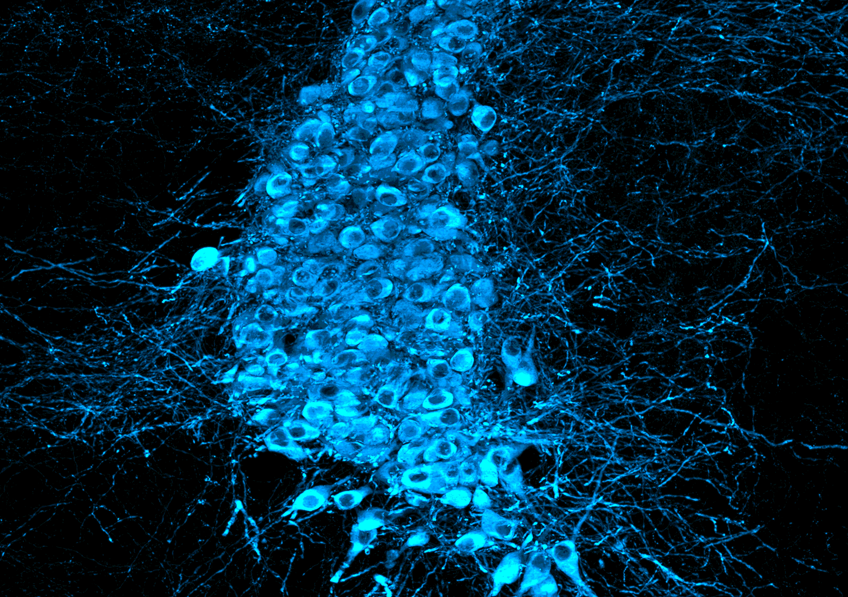

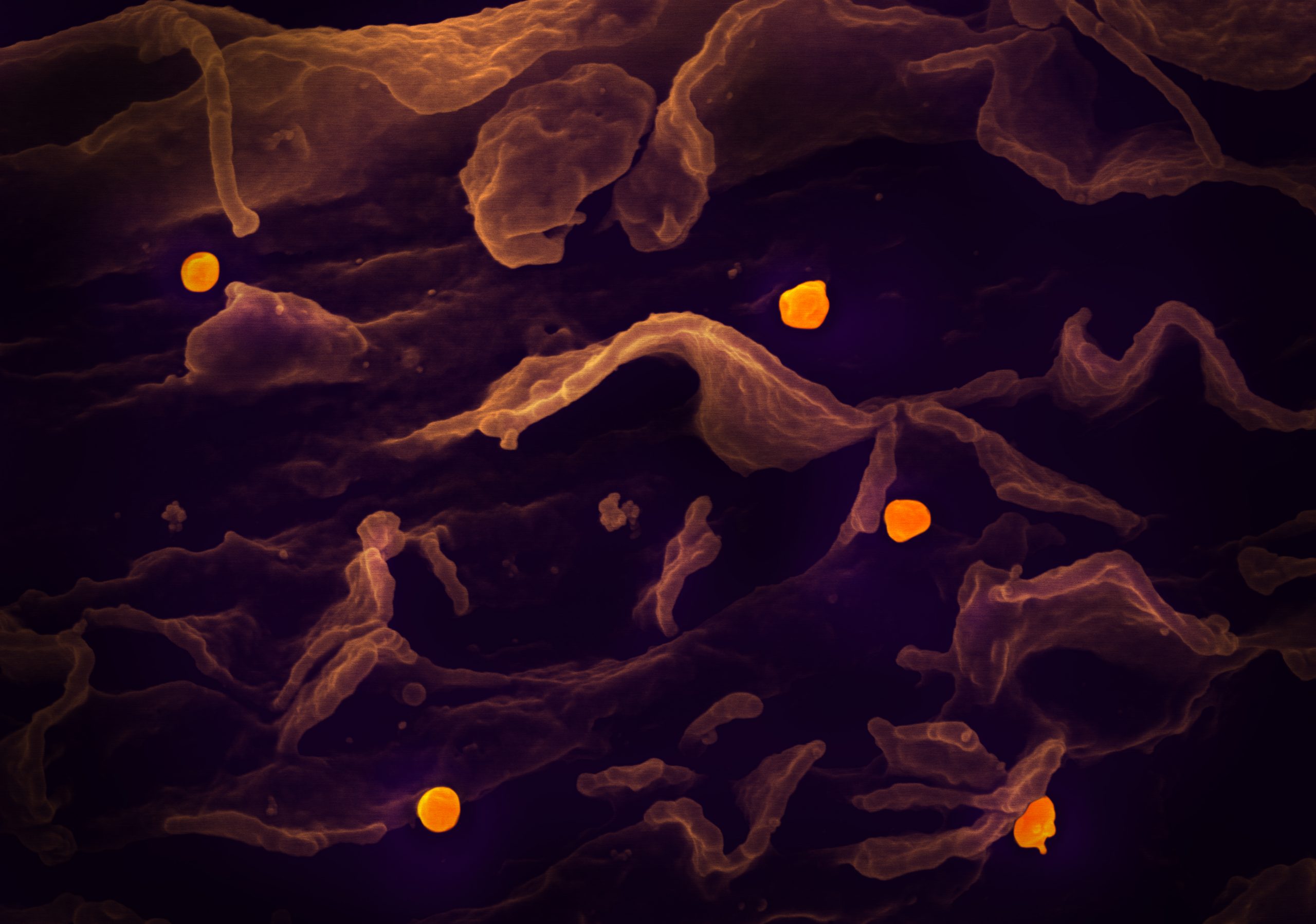

Human embryo at the blastocyst stage ready to implant. The nuclear envelope of the cells appears in blue and the actin cytoskeleton in orange. © Julie Firmin et Jean-Léon Maître (Institut Curie, Université PSL, CNRS UMR3215, INSERM U934)

Human embryo at the blastocyst stage ready to implant. The nuclear envelope of the cells appears in blue and the actin cytoskeleton in orange. © Julie Firmin et Jean-Léon Maître (Institut Curie, Université PSL, CNRS UMR3215, INSERM U934)

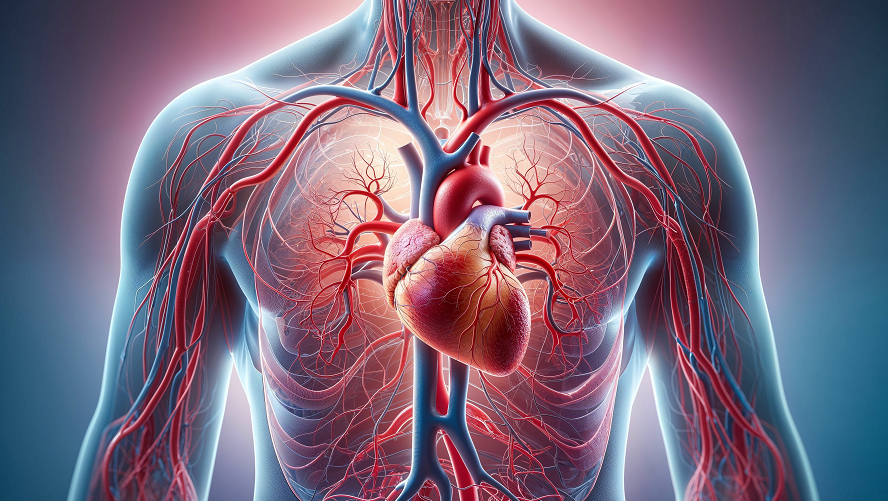

© Adobe stock

© Adobe stock

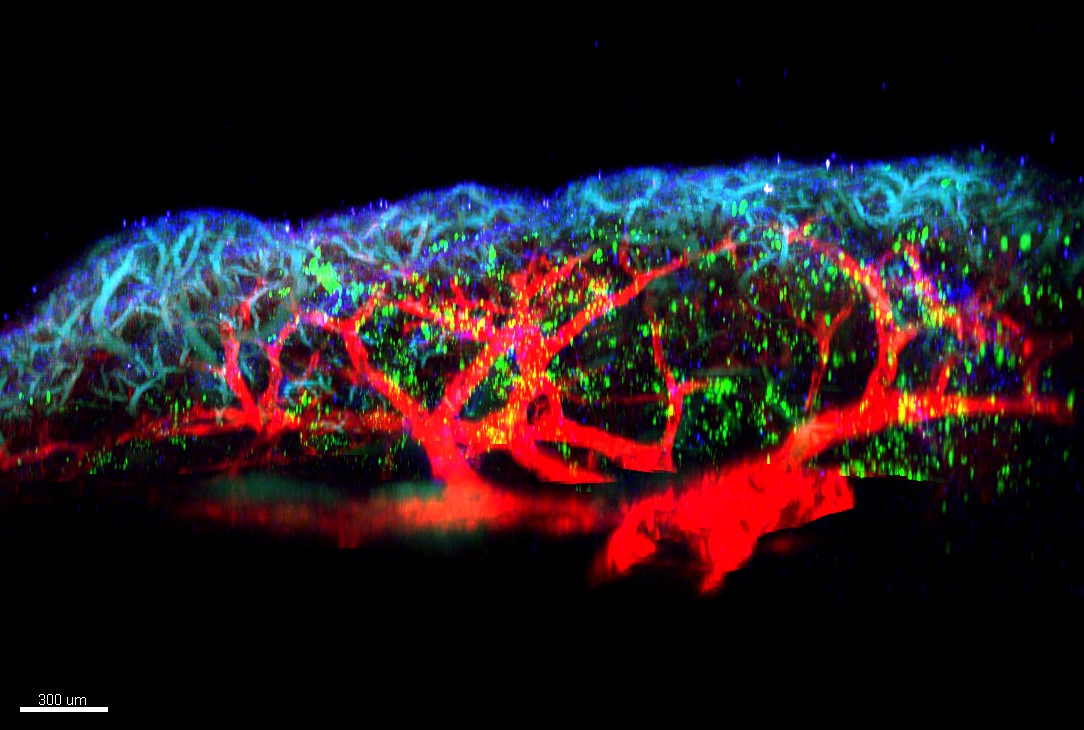

© AdobeStock

© AdobeStock